Using the vEOS Router on KVM and ESXi

This chapter describes the system requirements, installation, and configuration procedures for the vEOS router on a hypervisor.

Server

A server can be either a hardware or software entity.

A hardware server is the physical computer that executes the virtual machine manager or hypervisor and all the virtual machines. It is also known as the host machine.

A software server is the hypervisor or virtual machine manager that hosts and manages the virtual machines. It is also sometimes referred to as the host.

VMware ESXi Minimum Server Requirements

x86-64 server-class CPU (32-bit CPUs are not supported) with:

- Intel VT-x and VT-d support

- 4 cores running at a minimum of 2.4 GHz

- 16 GB RAM

- 8 GB free disk space

- Ethernet NICs that are SR-IOV capable

- BIOS / system firmware support for SR-IOV

KVM Requirements

vEOS must be deployed on an x86-64 architecture server running the KVM hypervisor.

KVM Minimum Server Requirements

8 GB free disk space

16 GB RAM

x86-64 server-class CPU (32-bit CPUs are not supported) with:

- Intel VT-x or AMD-V support for CPU virtualization

- Intel VT-d or AMD-IOMMU support for PCIe passthrough

- Intel AES-NI support

- 4 CPU cores running at 2.4 GHz

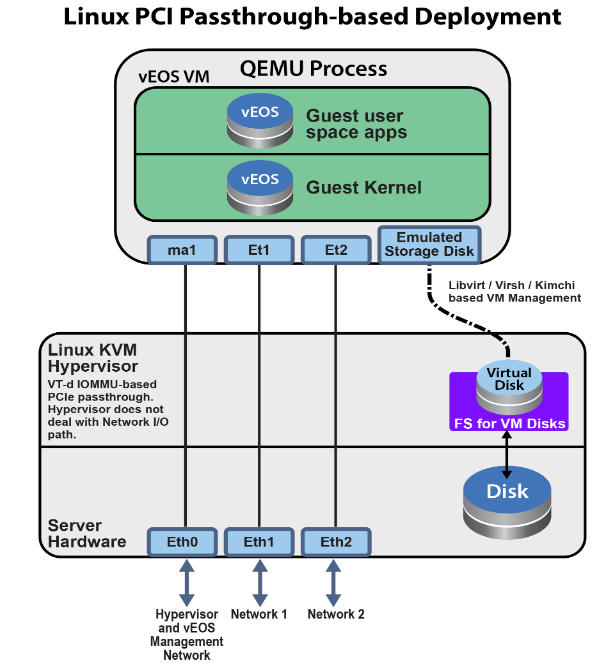

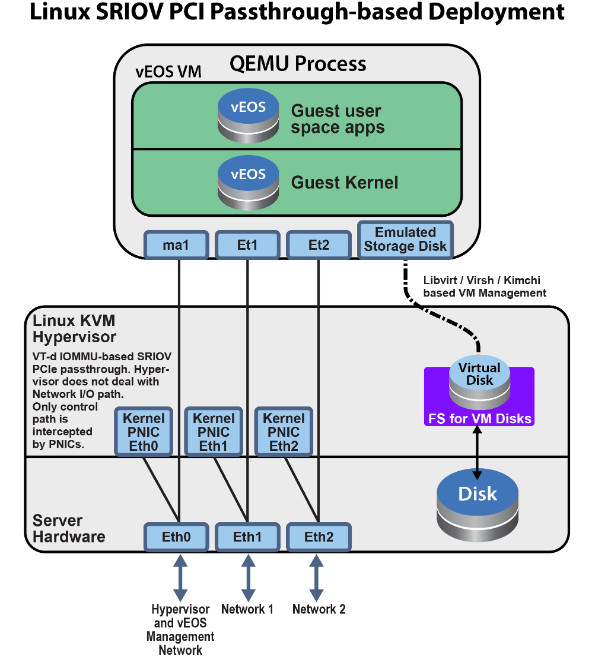

KVM SR-IOV-Based Deployment

- Ethernet NICs must be SR-IOV capable

- BIOS / System Firmware support for SR-IOV
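The CPU requirements above can be spot-checked on a Linux host by reading the flags line of /proc/cpuinfo. The sketch below is illustrative only: it assumes the standard Linux flag names vmx (Intel VT-x), svm (AMD-V), and aes (AES-NI), the function check_cpu_flags is a hypothetical name, and it does not check VT-d or AMD-IOMMU, which are not reported as CPU flags (use virt-host-validate for those).

```python
from pathlib import Path


def check_cpu_flags(cpuinfo_text: str) -> dict:
    """Hypothetical helper: report virtualization and AES-NI support.

    Only the first 'flags' line is inspected, which is enough when all
    cores share the same CPU model.
    """
    flags = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Format: "flags\t\t: fpu vme de ... vmx ... aes ..."
            flags = set(line.split(":", 1)[1].split())
            break
    return {
        "virtualization": bool(flags & {"vmx", "svm"}),  # Intel VT-x or AMD-V
        "aes_ni": "aes" in flags,
    }


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(check_cpu_flags(cpuinfo.read_text()))
```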

Supported Topologies

The following scenarios are described in the Hypervisor chapter:

- Launching vEOS on ESXi using the vSphere Web Client

- Launching vEOS on KVM with a Linux bridge

- Launching vEOS on KVM with SR-IOV

- Launching vEOS on KVM with PCI passthrough

VMware ESXi Hypervisor

This section describes the launch sequence for VMware ESXi 6.0 and 6.5.

Launching VMware ESXi 6.0 and 6.5

How to launch vEOS on VMware ESXi 6.0 and ESXi 6.5.

There are several ESXi user interfaces for managing the ESXi host, such as the vSphere Web Client and the ESXi Web Client. The following procedure launches vEOS on VMware ESXi 6.0 and 6.5 and provides a general guideline for deploying virtual machines from an OVF/OVA template.

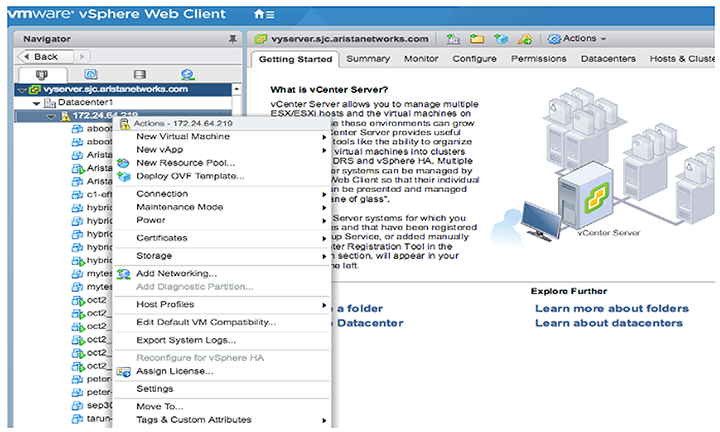

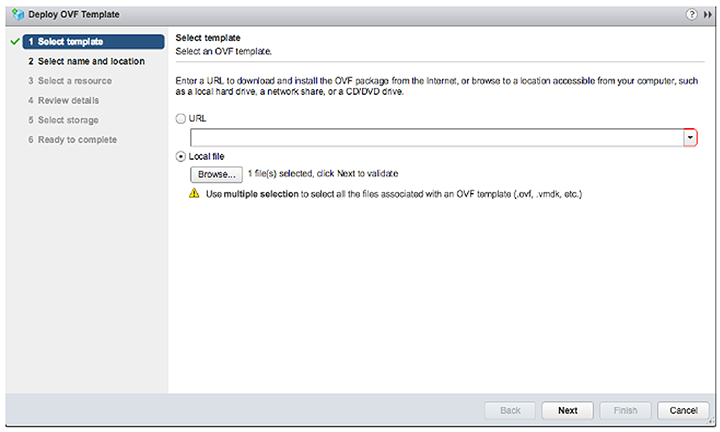

1. From the vCenter Server Web UI navigator, select Deploy OVF Template.

2. Select the OVA file from the local machine.

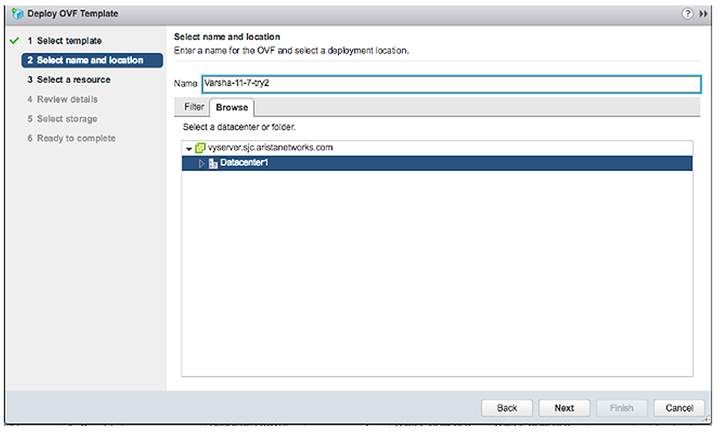

3. Select the name and location for the vEOS deployment.

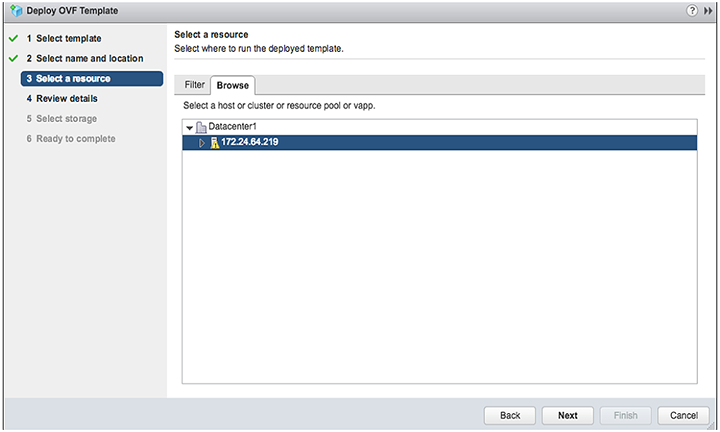

4. Select the host, cluster, resource pool, or vApp.

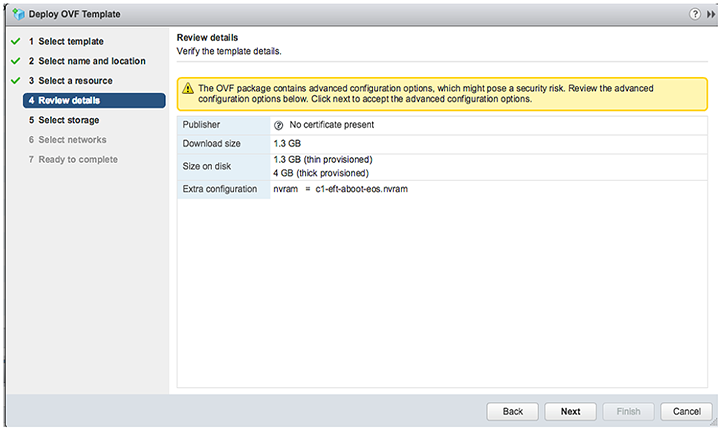

5. Verify the template details.

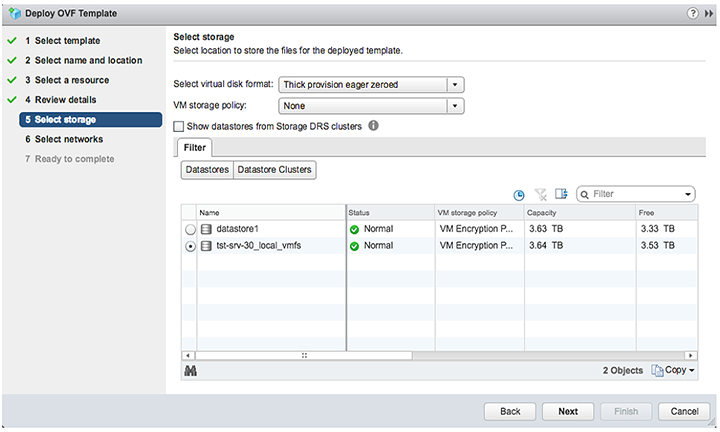

6. Select Thick Provision Eager Zeroed as the virtual disk format and select the datastore.

7. Select the default network.

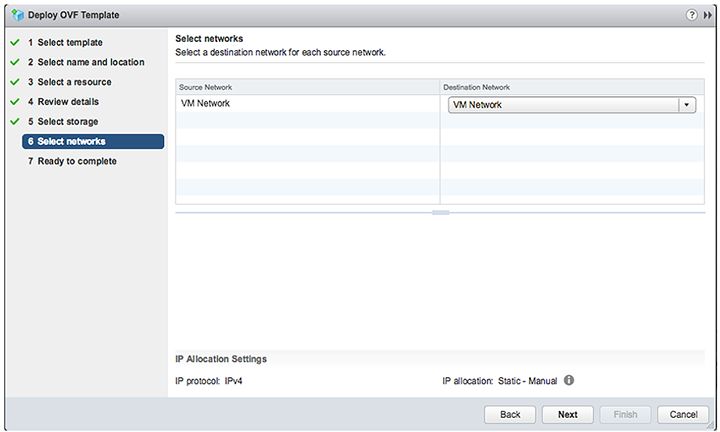

8. Complete the launch process.

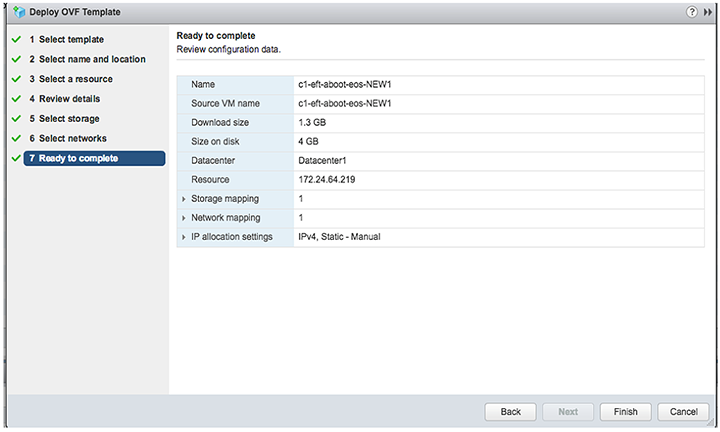

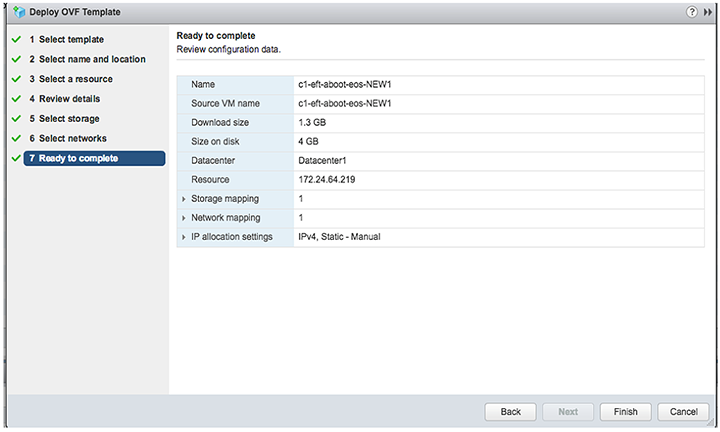

9. The Recent Tasks tab at the bottom of the page displays the deployment progress. Once the deployment is complete, power on the virtual machine.

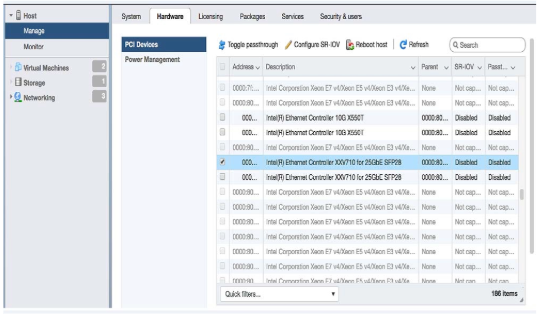

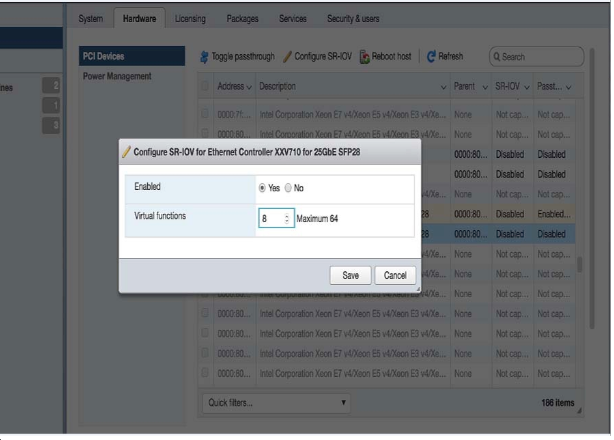

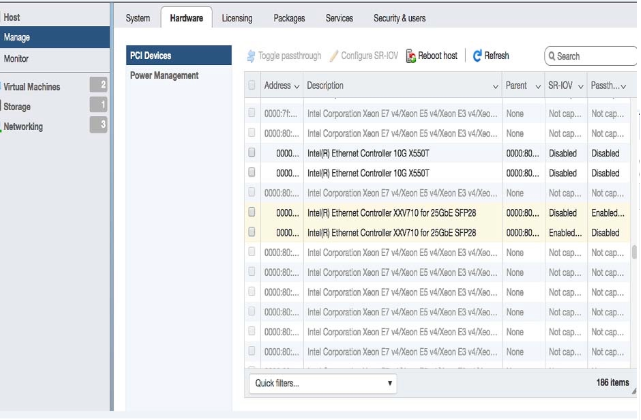

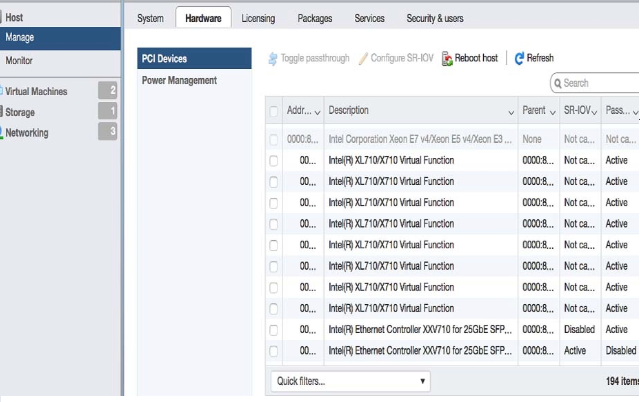

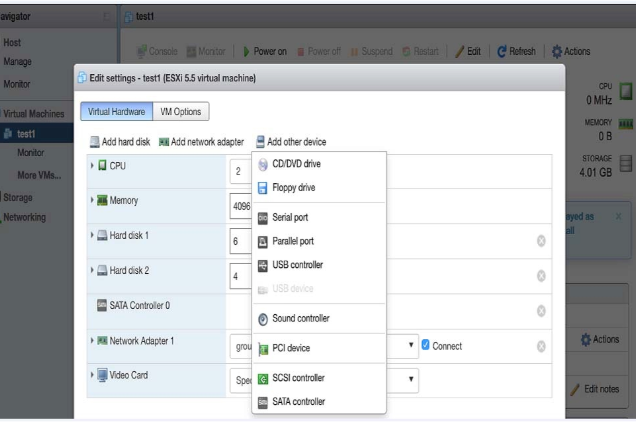

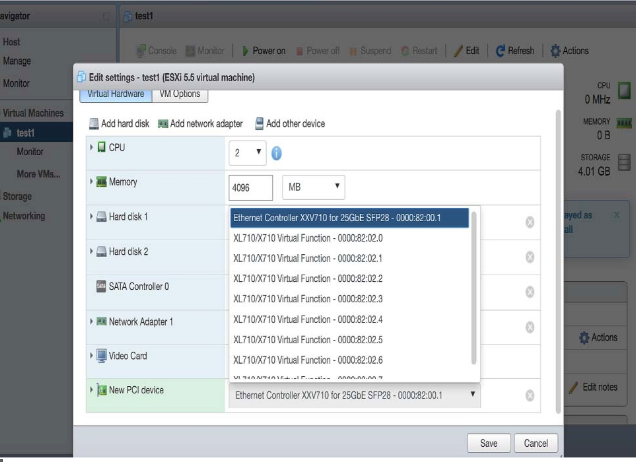

Enabling SR-IOV or PCI Passthrough on ESXi

This section describes how to enable single root input/output virtualization (SR-IOV) or PCI passthrough on VMware ESXi.

To enable SR-IOV or PCI passthrough on ESXi, complete the following steps.

KVM

This section describes the system requirements, installation, and configuration procedures for CloudEOS and vEOS.

Server

A server can be either a hardware or software entity.

A hardware server is the physical computer that executes the virtual machine manager or hypervisor and all the virtual machines. This is also known as the host machine.

A software server is the hypervisor or virtual machine manager that hosts and manages the virtual machines. It is also sometimes referred to as the host. In this document, the software server consists of Red Hat Linux with virtualization support (KVM).

System Requirements

The minimum system requirements for using KVM are listed below.

Minimum Server Requirements

Any VMware-supported ESXi server hardware.

Hypervisor support: Red Hat 7.x with virtualization support. For virtualization setup details, see https://wiki.centos.org/HowTos/kvm.

- Libvirt must be installed. To verify, run virsh list, which should return without errors.

- Python 2.7+ is required to run the installation script.

- vSphere 6.0

Minimum requirements:

- 2 vCPUs

- 4 GB memory

- 8 GB free disk space

Maximum capacities

- 16 vCPUs

- 8 network interfaces
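As a rough illustration, the minimums and maximums above can be encoded in a pre-deployment sizing check. This is a sketch under stated assumptions: the VmSizing type and check_sizing function are hypothetical, and the limits are copied from the lists above (2 to 16 vCPUs, at least 4 GB of memory, at least 8 GB of free disk space, at most 8 network interfaces).

```python
from dataclasses import dataclass

# Limits copied from the minimum requirements and maximum capacities above.
MIN_VCPUS, MAX_VCPUS = 2, 16
MIN_MEMORY_GB = 4
MIN_FREE_DISK_GB = 8
MAX_NICS = 8


@dataclass
class VmSizing:
    """Hypothetical description of a planned vEOS VM."""
    vcpus: int
    memory_gb: int
    free_disk_gb: int
    nics: int


def check_sizing(vm: VmSizing) -> list:
    """Return a list of problems; an empty list means the sizing is within limits."""
    problems = []
    if not MIN_VCPUS <= vm.vcpus <= MAX_VCPUS:
        problems.append(f"vCPUs must be between {MIN_VCPUS} and {MAX_VCPUS}")
    if vm.memory_gb < MIN_MEMORY_GB:
        problems.append(f"memory must be at least {MIN_MEMORY_GB} GB")
    if vm.free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"free disk space must be at least {MIN_FREE_DISK_GB} GB")
    if vm.nics > MAX_NICS:
        problems.append(f"at most {MAX_NICS} network interfaces are supported")
    return problems
```

For example, check_sizing(VmSizing(vcpus=2, memory_gb=4, free_disk_gb=8, nics=3)) returns an empty list.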

| Image Name | File Name | Details |
|---|---|---|
| KVM vEOS image | EOS.qcow2 | Image hard disk that contains vEOS. This file can grow as agents in vEOS generate logs, traces, etc. |

Using Libvirt to Manage CloudEOS and vEOS VMs on KVM

Libvirt is an open-source library that provides management of virtual machines, including CloudEOS and vEOS VMs.

Libvirt supports many functions, such as creating, updating, and deleting VMs.

The complete Libvirt command reference is available at http://libvirt.org/virshcmdref.html

Define a New VM

Define a domain from an XML file by using the virsh define <vm-definition-file.xml> command. This defines the domain but does not start it. The definition file specifies the VM name, CPU, memory, network connectivity, and the path to the image. The parameters are documented at https://libvirt.org/formatdomain.html. A sample CloudEOS and vEOS definition file appears in the example below.

Undefine the Inactive Domain

Undefine the configuration for an inactive domain by using the virsh undefine <vm-name> command, specifying its domain name.

Start VM

Start a previously defined or inactive domain by using the virsh start <vm-name> command.

Stop VM

Terminate a domain immediately by using the virsh destroy <vm-name> command.
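The define, start, destroy, and undefine commands above can be driven from a script. The following is a minimal sketch, assuming the virsh binary is on the PATH; virsh_args and run_virsh are hypothetical helper names, and dry_run=True only prints each command instead of executing it. The VM name kvs1-veos1 is taken from the sample XML template.

```python
import shlex
import subprocess

# virsh subcommands described above: define takes an XML file, the others a VM name.
LIFECYCLE = ("define", "start", "destroy", "undefine")


def virsh_args(action: str, target: str) -> list:
    """Hypothetical helper: build the argument list for one virsh lifecycle command."""
    if action not in LIFECYCLE:
        raise ValueError(f"unsupported action: {action}")
    return ["virsh", action, target]


def run_virsh(action: str, target: str, dry_run: bool = True) -> list:
    """Print (dry run) or execute a virsh command; returns its argument list."""
    args = virsh_args(action, target)
    if dry_run:
        print(shlex.join(args))
    else:
        # check=True raises CalledProcessError if virsh exits with an error.
        subprocess.run(args, check=True)
    return args


if __name__ == "__main__":
    # Typical order: define from XML, start; later destroy and undefine to remove.
    run_virsh("define", "veos.xml")
    run_virsh("start", "kvs1-veos1")
    run_virsh("destroy", "kvs1-veos1")
    run_virsh("undefine", "kvs1-veos1")
```

Keeping the argument list separate from execution makes the sequence easy to review before running it with dry_run=False.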

Managing Networks

The XML definition format for networks is documented at https://libvirt.org/formatnetwork.html. The network commands are similar to the VM commands, but with the prefix net-:

The virsh net-define <network-definition-file.xml> command defines a virtual network from an XML file without starting it.

The virsh net-undefine <network-name> command removes an inactive virtual network from the libvirt configuration.

The virsh net-start <network-name> command manually starts a virtual network that is not running.

The virsh net-destroy <network-name> command shuts down a running virtual network.
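For example, a libvirt network that simply attaches guests to an existing host bridge (such as brWAN from the XML template in the next section) uses forward mode='bridge'. The sketch below generates such a definition for virsh net-define; the network name wan-net and the helper bridge_network_xml are hypothetical, and the bridge itself must already exist on the host.

```python
import xml.etree.ElementTree as ET


def bridge_network_xml(net_name: str, bridge: str) -> str:
    """Hypothetical helper: libvirt network XML that reuses an existing host bridge."""
    network = ET.Element("network")
    ET.SubElement(network, "name").text = net_name
    # mode='bridge' tells libvirt to attach guests to a bridge it does not manage.
    ET.SubElement(network, "forward", mode="bridge")
    ET.SubElement(network, "bridge", name=bridge)
    return ET.tostring(network, encoding="unicode")


if __name__ == "__main__":
    # Save this output as wan-net.xml, then run:
    #   virsh net-define wan-net.xml
    #   virsh net-start wan-net
    print(bridge_network_xml("wan-net", "brWAN"))
```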

Launching vEOS in LinuxBridge Mode

Use the SetupLinuxBridge.pyc script to set up the Linux bridge. Usage: python SetupLinuxBridge.pyc <bridge-name>

Copy the following XML template into a file (for example, veos.xml), customize the elements for your environment (such as the VM name, image file paths, and bridge names), and then run the following commands:

- virsh define <vEOS definition file, for example veos.xml>

- virsh start <veos-name>

- virsh console <veos-name>

<domain type='kvm'>

<!-- veos name, cpu and memory settings -->

<name>kvs1-veos1</name>

<memory unit='MiB'>4096</memory>

<currentMemory unit='MiB'>4096</currentMemory>

<vcpu placement='static'>2</vcpu>

<resource>

<partition>/machine</partition>

</resource>

<cpu mode='host-model'/>

<os>

<type arch='x86_64'>hvm</type>

<boot dev='cdrom'/>

<boot dev='hd'/>

</os>

<features>

<acpi/>

<apic/>

<pae/>

</features>

<clock offset='utc'/>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>restart</on_crash>

<devices>

<emulator>/usr/bin/qemu-system-x86_64</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='directsync'/>

<source file='/path_to_file/CloudEOS.qcow2'/>

<target dev='hda' bus='ide'/>

<alias name='ide0-0-0'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

<disk type='file' device='cdrom'>

<driver name='qemu' type='raw'/>

<source file='/path_to_file/Aboot-veos-serial.iso'/>

<target dev='hdc' bus='ide'/>

<readonly/>

<alias name='ide0-1-0'/>

<address type='drive' controller='0' bus='1' target='0' unit='0'/>

</disk>

<controller type='usb' index='0'>

<alias name='usb0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>

</controller>

<controller type='pci' index='0' model='pci-root'>

<alias name='pci0'/>

</controller>

<controller type='ide' index='0'>

<alias name='ide0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<!-- In this case management is connected to linux bridge -->

<interface type='bridge'>

<source bridge='brMgmt'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

<serial type='pty'>

<source path='/dev/pts/4'/>

<target port='0'/>

<alias name='serial0'/>

</serial>

<console type='pty' tty='/dev/pts/4'>

<source path='/dev/pts/4'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

<input type='mouse' bus='ps2'/>

<graphics type='vnc' port='5903' autoport='yes' listen='127.0.0.1'>

<listen type='address' address='127.0.0.1'/>

</graphics>

<video>

<model type='cirrus' vram='9216' heads='1'/>

<alias name='video0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

<memballoon model='virtio'>

<alias name='balloon0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</memballoon>

<!-- Two data ports on different VLANs.

To add more interfaces, copy an interface element and increment the slot number.

Note that the brWAN and brLAN bridges must be created beforehand. -->

<interface type='bridge'>

<source bridge='brWAN'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>

</interface>

<interface type='bridge'>

<source bridge='brLAN'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>

</interface>

</devices>

</domain>

Example Deployment

VIRTIO & Linux Bridging Deployment

vEOS can use paravirtualized network I/O interfaces, known in Linux KVM as virtio. Each NIC is connected to its own Linux layer-2 bridge in the hypervisor, which in turn provides access to an uplink.

In this example:

- Ethernet1 connects through a LinuxBridge to the physical Ethernet port that connects to the WAN. The router is configured with a WAN IP address on this port.

- Ethernet2 connects through a LinuxBridge to the physical Ethernet port that connects to the LAN.

- The server IP address in the diagram is assumed to be configured on the LAN LinuxBridge device.

Note: Arista recommends using Ethernet1 for WAN and Ethernet2 for LAN. However, any vEOS port can be used.
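The comment in the LinuxBridge XML template says to add data ports by copying an interface element and incrementing the PCI slot number. The sketch below automates that step under stated assumptions: data_interfaces and add_data_ports are hypothetical helpers, slots start at 0x05 because 0x02 to 0x04 are already used in the template, and the elements are appended to the <devices> element of an existing domain XML such as veos.xml.

```python
import xml.etree.ElementTree as ET


def data_interfaces(bridges, first_slot: int = 0x05) -> list:
    """Hypothetical helper: one virtio bridge interface per bridge, consecutive slots."""
    interfaces = []
    for offset, bridge in enumerate(bridges):
        iface = ET.Element("interface", type="bridge")
        ET.SubElement(iface, "source", bridge=bridge)
        ET.SubElement(iface, "model", type="virtio")
        ET.SubElement(
            iface, "address", type="pci", domain="0x0000", bus="0x00",
            slot=f"0x{first_slot + offset:02x}", function="0x0",
        )
        interfaces.append(iface)
    return interfaces


def add_data_ports(domain_xml: str, bridges) -> str:
    """Append generated interfaces to <devices>.

    Note: ElementTree discards XML comments when parsing, so comments
    in the input template are not preserved in the output.
    """
    domain = ET.fromstring(domain_xml)
    devices = domain.find("devices")
    if devices is None:
        raise ValueError("domain XML has no <devices> element")
    devices.extend(data_interfaces(bridges))
    return ET.tostring(domain, encoding="unicode")
```

Calling data_interfaces(["brWAN", "brLAN"]) reproduces the two data-port elements from the template, in slots 0x05 and 0x06.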

Setting Up the Host for Single Root I/O Virtualization (SR-IOV)

Single Root I/O Virtualization (SR-IOV) allows a single PCIe physical device under a single root port to appear to be multiple physical devices to the hypervisor.

The following tasks are required to set up the host for SR-IOV.

1. Verify the IOMMU Support.

Use the virt-host-validate Linux command to check IOMMU (input/output memory management unit) support. If the IOMMU checks do not report PASS, check the BIOS and kernel settings.

The expected output is shown below.

[arista@solution]$ virt-host-validate

QEMU: Checking for device assignment IOMMU support : PASS

QEMU: Checking if IOMMU is enabled by kernel : PASS

2. Verify the Drivers are Supported.

Ensure that the PCI device with SR-IOV capabilities is detected. In the example below, an Intel 82599ES network interface card, which supports SR-IOV, is detected.

Verify the ports and NIC IDs in the output of the lspci | grep Ethernet Linux command below.

# lspci | grep Ethernet

01:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

01:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

01:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

01:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

82:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

82:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

83:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

83:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

After device support is confirmed, the kernel should load the driver module automatically. To verify that the driver kernel module is active, use the lsmod | grep igb Linux command.

[root@kvmsolution]# lsmod | grep igb

igb                   197328  0

ptp                    19231  2 igb,ixgbe

dca                    15130  2 igb,ixgbe

i2c_algo_bit           13413  2 ast,igb

i2c_core               40756  6 ast,drm,igb,i2c_i801,drm_kms_helper,i2c_algo_bit

4. Activate Virtual Functions (VFs).

The maximum number of supported virtual functions depends on the type of card. To activate VFs, write the desired number of VFs to /sys/class/net/<Device_Name>/device/sriov_numvfs, or use the persistent module option described below. The following example shows that the PF with identifier 82:00.0 supports a total of 63 VFs.

Example

[arista@localhost]$ cat /sys/bus/pci/devices/0000\:82\:00.0/sriov_totalvfs

63

To activate seven VFs per PF and make them persistent across reboots, add the line options ixgbe max_vfs=7 to the ixgbe.conf and sriov.conf files in /etc/modprobe.d.

Use the rmmod ixgbe and modprobe ixgbe Linux commands to unload and reload the module.
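The sysfs method (writing a VF count to sriov_numvfs) can also be scripted. This is a minimal sketch, assuming the standard Linux sysfs layout /sys/bus/pci/devices/<PCI address>/ with the sriov_totalvfs and sriov_numvfs attributes, and root privileges for the write; set_num_vfs is a hypothetical helper. The kernel rejects changing one nonzero VF count directly to another, so the sketch writes 0 first. Unlike the modprobe.d option, VFs created this way do not persist across reboots.

```python
from pathlib import Path

SYSFS_PCI = Path("/sys/bus/pci/devices")


def set_num_vfs(pci_address: str, count: int, sysfs_root: Path = SYSFS_PCI) -> int:
    """Hypothetical helper: enable `count` VFs on a PF such as '0000:82:00.0'.

    Returns the number of VFs the PF supports (sriov_totalvfs).
    """
    dev = sysfs_root / pci_address
    total = int((dev / "sriov_totalvfs").read_text().strip())
    if not 0 <= count <= total:
        raise ValueError(f"{pci_address} supports 0..{total} VFs, got {count}")
    numvfs = dev / "sriov_numvfs"
    # A nonzero VF count cannot be changed to another nonzero value directly.
    if int(numvfs.read_text().strip()) != 0:
        numvfs.write_text("0")
    numvfs.write_text(str(count))
    return total
```

On the example host, set_num_vfs("0000:82:00.0", 7) would request seven VFs on the first 82599ES port; the sysfs_root parameter exists only so the logic can be exercised against a scratch directory.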

5. Verify the VFs are detected.

Verify the VFs are detected by using the lspci | grep Ethernet Linux command. For the two identifiers 82:00.0 and 82:00.1, 14 VFs are detected.

# lspci | grep Ethernet

82:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

82:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

82:10.0 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.1 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.2 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.3 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.4 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.5 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.6 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:10.7 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:11.0 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:11.1 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:11.2 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:11.3 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:11.4 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

82:11.5 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

6. Locate the node device names for the PFs and VFs.

Locate the libvirt node device names for the PFs and VFs. The virsh nodedev-list | grep 82 command below lists the node devices for identifiers 82:00.0 and 82:00.1. The first two entries are the PFs, and the remaining entries are the VFs.

# virsh nodedev-list | grep 82

pci_0000_82_00_0

pci_0000_82_00_1

pci_0000_82_10_0

pci_0000_82_10_1

pci_0000_82_10_2

pci_0000_82_10_3

pci_0000_82_10_4

pci_0000_82_10_5

pci_0000_82_10_6

pci_0000_82_10_7

pci_0000_82_11_0

pci_0000_82_11_1

pci_0000_82_11_2

pci_0000_82_11_3

pci_0000_82_11_4

pci_0000_82_11_5

7. Select the node device name of the VF.

Select the node device name of the VF that will attach to the VM (vEOS). Use the virsh nodedev-dumpxml <device-name> command to locate the bus, slot, and function parameters. For example, the device pci_0000_82_11_1 displays the following details.

# virsh nodedev-dumpxml pci_0000_82_11_1

<device>

<name>pci_0000_82_11_1</name>

<path>/sys/devices/pci0000:80/0000:80:02.0/0000:82:11.1</path>

<parent>computer</parent>

<driver>

<name>ixgbevf</name>

</driver>

<capability type='pci'>

<domain>0</domain>

<bus>130</bus>

<slot>17</slot>

<function>1</function>

<product id='0x10ed'>82599 Ethernet Controller Virtual Function</product>

<vendor id='0x8086'>Intel Corporation</vendor>

<capability type='phys_function'>

<address domain='0x0000' bus='0x82' slot='0x00' function='0x1'/>

</capability>

<iommuGroup number='71'>

<address domain='0x0000' bus='0x82' slot='0x11' function='0x1'/>

</iommuGroup>

<numa node='1'/>

<pci-express>

<link validity='cap' port='0' width='0'/>

<link validity='sta' width='0'/>

</pci-express>

</capability>

</device>

8. Create a New Interface.

Shut down the vEOS VM if it is running. Open the XML file of the vEOS VM for editing by using the virsh edit <vm-name> command. Alongside the existing interface elements, create a new interface by adding the details shown below. The bus, slot, and function values are the hexadecimal equivalents of the decimal values found in step 7: bus 130 is 0x82, slot 17 is 0x11, and function 1 is 0x1.

<interface type='hostdev' managed='yes'>

<source>

<address type='pci' domain='0x0000' bus='0x82' slot='0x11' function='0x1'/>

</source>

</interface>

9. Start the vEOS VM and verify that the added interface appears on the VM. Use the ethtool -i et9 command to verify that the driver for the added interface is ixgbevf.

switch(config)#show interface status

Port Name Status Vlan Duplex Speed Type Flags

Et9 notconnect routed unconf unconf 10/100/1000

Ma1 connected routed a-full a-1G 10/100/1000

[admin@veos]$ ethtool -i et9

driver: ixgbevf

version: 2.12.1-k

firmware-version:

bus-info: 0000:00:0c.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: no

supports-register-dump: yes

supports-priv-flags: no
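Steps 6 through 8 can be shortened because a libvirt PCI node device name already encodes the PCI address in hexadecimal: pci_0000_82_11_1 is domain 0x0000, bus 0x82, slot 0x11, function 0x1, the same values step 8 derives from the decimal fields in step 7. The sketch below builds the interface element from such a name; pci_address_from_nodedev and vf_interface_xml are hypothetical helpers, and the sketch assumes the pci_DDDD_BB_SS_F naming shown in the nodedev-list output above.

```python
import re
import xml.etree.ElementTree as ET

# libvirt PCI node device names: pci_<domain>_<bus>_<slot>_<function>, in hex.
NODEDEV_RE = re.compile(
    r"^pci_([0-9a-f]{4})_([0-9a-f]{2})_([0-9a-f]{2})_([0-7])$", re.IGNORECASE
)


def pci_address_from_nodedev(name: str) -> dict:
    """Hypothetical helper: 'pci_0000_82_11_1' -> attributes for a libvirt <address>."""
    match = NODEDEV_RE.match(name)
    if match is None:
        raise ValueError(f"not a PCI node device name: {name}")
    domain, bus, slot, function = match.groups()
    return {
        "type": "pci",
        "domain": f"0x{domain}",
        "bus": f"0x{bus}",
        "slot": f"0x{slot}",
        "function": f"0x{function}",
    }


def vf_interface_xml(name: str) -> str:
    """Build the <interface type='hostdev'> element that attaches an SR-IOV VF."""
    iface = ET.Element("interface", type="hostdev", managed="yes")
    source = ET.SubElement(iface, "source")
    ET.SubElement(source, "address", pci_address_from_nodedev(name))
    return ET.tostring(iface, encoding="unicode")
```

The output of vf_interface_xml("pci_0000_82_11_1") is equivalent to the interface element in step 8 and can be pasted in with virsh edit.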

Launching SR-IOV

vEOS can also use PCIe SR-IOV I/O interfaces. Each SR-IOV virtual function is passed through to the VM so that network I/O does not pass through the hypervisor. In this model, the hypervisor and multiple VMs can share the same physical NIC.

SR-IOV has the following advantages over LinuxBridge:

- Higher performance (approximately 2x).

- Better latency and jitter characteristics.

- vEOS directly receives physical port state indications from the virtual device.

- SR-IOV virtualizes the NIC.

- The NICs have a built-in bridge for basic bridging.

- Avoids software handling of the packets in the kernel.

Setting Up the Host and Launching PCI Pass-through

Set up a networking device to use PCI pass-through.

1. Identify Available Physical Functions.

As with SR-IOV, identify an available physical function (a NIC in this scenario) and its identifier. Use the lspci | grep Ethernet Linux command to display the available physical functions.

In this example, the Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection at 82:00.0 is the physical function, and 82:00.0 is its device identifier.

# lspci | grep Ethernet

01:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

01:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

01:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

01:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

81:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

82:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

82:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

83:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

83:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

2. Verify Available Physical Functions.

Verify the available physical functions by using the virsh Linux commands.

[arista@solution]$ virsh nodedev-list | grep 82_00_0

pci_0000_82_00_0

[arista@solution]$ virsh nodedev-dumpxml pci_0000_82_00_0

<device>

<name>pci_0000_82_00_0</name>

<path>/sys/devices/pci0000:80/0000:80:02.0/0000:82:00.0</path>

<parent>pci_0000_80_02_0</parent>

<driver>

<name>vfio-pci</name>

</driver>

<capability type='pci'>

<domain>0</domain>

<bus>130</bus>

<slot>0</slot>

<function>0</function>

<product id='0x10fb'>82599ES 10-Gigabit SFI/SFP+ Network Connection</product>

<vendor id='0x8086'>Intel Corporation</vendor>

<capability type='virt_functions' maxCount='64'/>

In this example, the domain is 0 (hex 0x0), the bus is 130 (hex 0x82), the slot is 0 (hex 0x0), and the function is 0 (hex 0x0).
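The decimal-to-hexadecimal conversion above can be scripted by reading the <capability type='pci'> fields from the complete virsh nodedev-dumpxml output. The sketch below assumes that output is passed in as a string; hostdev_xml_from_dumpxml is a hypothetical helper, and it emits the <hostdev> element used for PCI passthrough.

```python
import xml.etree.ElementTree as ET


def hostdev_xml_from_dumpxml(dumpxml: str) -> str:
    """Hypothetical helper: PCI passthrough <hostdev> from nodedev-dumpxml output."""
    cap = ET.fromstring(dumpxml).find("capability[@type='pci']")
    if cap is None:
        raise ValueError("no PCI capability found in the device XML")
    # nodedev-dumpxml reports these fields in decimal, e.g. <bus>130</bus>.
    domain, bus, slot, function = (
        int(cap.findtext(field)) for field in ("domain", "bus", "slot", "function")
    )
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(
        source, "address",
        domain=f"0x{domain:04x}", bus=f"0x{bus:02x}",
        slot=f"0x{slot:02x}", function=f"0x{function:x}",
    )
    return ET.tostring(hostdev, encoding="unicode")
```

For the PF above (domain 0, bus 130, slot 0, function 0), this yields an address with domain='0x0000', bus='0x82', slot='0x00', and function='0x0'.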

With the domain, bus, slot, and function information, construct the device entry and add it to the VM's XML configuration.

<devices>

...

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x82' slot='0x00' function='0x0'/>

</source>

</hostdev>

3. Verify the NIC was detected by the VM.

When the VM (vEOS in this case) starts, it should detect the NIC.

switch#bash

Arista Networks EOS shell

[admin@veos1 ~]$ lspci | grep Ethernet

00:03.0 Ethernet controller: Intel Corporation 82599EB 10-Gigabit SFI/SFP+ Network Connection (rev 01)

00:05.0 Ethernet controller: Red Hat, Inc Virtio network device

[admin@veos ~]$

4. Verify Driver Requirements.

If vEOS supports the NIC and any other driver requirements are met, the corresponding Ethernet interfaces are available for use in vEOS. Use the show interface status command to display the available vEOS Ethernet interfaces.

switch#show interface status

Port Name Status Vlan Duplex Speed Type Flags

Et1 connected routed full 10G 10/100/1000

Ma1 connected routed a-full a-1G 10/100/1000

switch#bash

bash-4.3# ethtool -i et1

driver: ixgbe

version: 4.2.1-k

firmware-version: 0x18b30001

bus-info: 0000:00:03.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: no

Example Deployment

vEOS can use passthrough I/O interfaces, where network I/O does not pass through the hypervisor. In this model, the VM owns the entire network card, fully bypassing the hypervisor.

Setting up SR-IOV is initially more involved. Arista recommends starting out with LinuxBridge.

SR-IOV has the following advantages over LinuxBridge:

- Higher performance (approximately 2x)

- Better latency and jitter characteristics

- vEOS directly receives physical port state indications from the virtual device.